Overview

An obstacle-avoiding, path-finding AI system using Elman Recurrent Neural Networks and lidar-based input simulation.

This project tackles the classic problem of obstacle avoidance and path planning for autonomous mobile robots using Recurrent Neural Networks (RNNs). We implemented a simple Elman RNN that takes simulated lidar readings as input and learns to navigate a grid environment containing obstacles.

Highlights

- 182 total input features: 180 lidar sensor values (one reading every 2° over a 360° sweep) + 2 positional values

- Custom Elman RNN with a feedback loop on the second hidden layer (the network's third layer, counting the input layer)

- Softmax-based decision making: Forward, Backward, Left, Right

- Dynamic training set generated from map and path planning samples

- Trained using backpropagation with a cost function based on log-probability
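
The softmax decision and log-probability cost listed above can be illustrated with a small, self-contained sketch. It is not the project's code: the action order in `ACTIONS`, the helper names, and the example scores are assumptions made for illustration, and the cost shown is the standard negative log-probability of the target action.

```python
import math

# Assumed action order; the project may index its four outputs differently.
ACTIONS = ["Forward", "Backward", "Left", "Right"]

def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def choose_action(scores):
    """Pick the action with the highest softmax probability."""
    probs = softmax(scores)
    return ACTIONS[probs.index(max(probs))]

def log_prob_cost(scores, target_index):
    """Negative log-probability of the target action (lower is better)."""
    return -math.log(softmax(scores)[target_index])

example_scores = [2.0, 0.1, 0.5, -1.0]  # made-up output-layer scores
print(choose_action(example_scores))
print(log_prob_cost(example_scores, 0))
```

The cost is small when the network assigns high probability to the action taken in the training path and grows as that probability shrinks.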

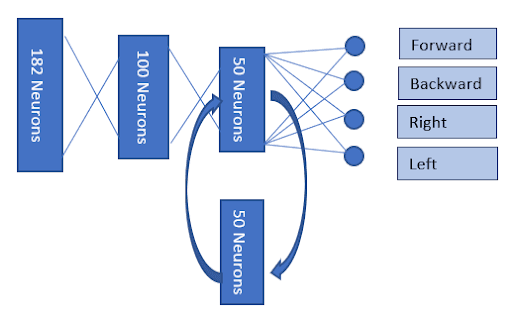

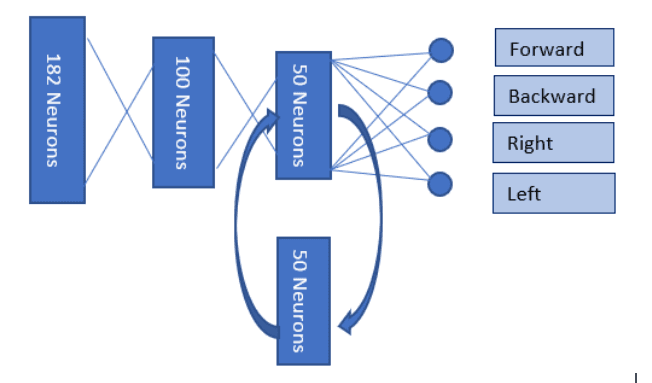

Architecture

- Input Layer: 182 neurons

- Hidden Layer 1: 100 neurons (sigmoid)

- Hidden Layer 2: 50 neurons (ReLU with Elman recurrent memory)

- Output Layer: 4 neurons (softmax decision)

The recurrent layer is implemented by feeding the third layer (Hidden Layer 2) its own previous state through a hidden-state update. The network is trained for 200 iterations on a dataset generated from simulated maps and paths.
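
As an illustration of the layer layout above, the following sketch describes the network as a list of layer dictionaries and derives the parameter shapes from it. The `layer_size` key, the `linear` output activation, the value `RNN_layer = 2`, and the `parameter_shapes` helper are assumptions; only the `activation` key, the `W`/`b`/`U` parameter naming, and the layer sizes come from this document.

```python
# Layer sizes and activations as listed above; index 0 is the input layer.
nn_architecture = [
    {"layer_size": 182, "activation": "none"},
    {"layer_size": 100, "activation": "sigmoid"},
    {"layer_size": 50, "activation": "relu"},
    # Assumed: the output layer is linear and softmax is applied afterwards.
    {"layer_size": 4, "activation": "linear"},
]
RNN_layer = 2  # assumed index of the recurrent layer (third layer overall)

def parameter_shapes(architecture, rnn_layer):
    """Return the (rows, cols) shape of every weight, bias, and recurrent matrix."""
    shapes = {}
    for l in range(1, len(architecture)):
        n_out = architecture[l]["layer_size"]
        n_in = architecture[l - 1]["layer_size"]
        shapes["W" + str(l)] = (n_out, n_in)
        shapes["b" + str(l)] = (n_out, 1)
        if l == rnn_layer:
            # Elman feedback: the layer's previous activation feeds back into itself.
            shapes["U" + str(l)] = (n_out, n_out)
    return shapes

shapes = parameter_shapes(nn_architecture, RNN_layer)
print(shapes["W1"], shapes["U2"], shapes["W3"])  # (100, 182) (50, 50) (4, 50)
```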

Key Code Snippets

1. Simulating Lidar Sensor Readings
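
`lidarValues` in this section calls a `distance` helper that is not shown. The sketch below is one plausible way to write it, not the project's implementation. It assumes the map is a 2D grid (a list of rows) where `1` marks an obstacle, a position is `[row, col]`, angle 0 points along increasing column, and the ray is marched in fixed steps; the `step` and `max_range` parameters are hypothetical.

```python
import math

def distance(position, angle_deg, grid, step=0.25, max_range=50.0):
    """March a ray from `position` and return the distance to the first obstacle.

    Assumptions: `grid[row][col] == 1` marks an obstacle, cells outside the
    grid count as walls, and `max_range` is returned if nothing is hit.
    """
    row, col = position
    d_row = math.sin(math.radians(angle_deg))
    d_col = math.cos(math.radians(angle_deg))
    travelled = step
    while travelled <= max_range:
        r = int(round(row + d_row * travelled))
        c = int(round(col + d_col * travelled))
        outside = r < 0 or c < 0 or r >= len(grid) or c >= len(grid[0])
        if outside or grid[r][c] == 1:
            return travelled
        travelled += step
    return max_range

# A 5x5 map with a single obstacle three cells along angle 0 from the robot.
demo_map = [[0] * 5 for _ in range(5)]
demo_map[2][4] = 1
print(distance([2, 1], 0, demo_map))
print(distance([2, 1], 180, demo_map))
```

A smaller `step` gives finer distance resolution at the cost of more grid lookups per ray.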

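    def lidarValues(Position, mapArray):
        # Simulate a 360° lidar sweep, sampling every 2° (180 readings).
        lidarRange = 360
        distanceMatrix = []
        startangle = 0
        for theta in range(0, lidarRange, 2):
            angle = startangle + theta
            # distance() is expected to return the range to the nearest obstacle along `angle`.
            distanceMatrix.append(distance(Position, angle, mapArray))
        return distanceMatrix

2. Generating Training Data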

    def createTrainingSet(mapArray, Forward_path, initialPosition):
        # Build one input sequence (Xx[m]) and one label sequence (Yy[m]) per map.
        Xx, Yy = [], []
        for m in range(len(Forward_path)):
            Xx.append([])
            Yy.append([])
            curr_position = initialPosition
            for i in range(len(Forward_path[m])):
                l_values = lidarValues(curr_position, mapArray[m])
                # 180 lidar readings + 2 position values = 182 input features.
                Xx[m].append(l_values + curr_position)
                Y = decode_Output(Forward_path[m][i])
                Yy[m].append(Y)
                curr_position = nextPosition(curr_position, Y)
        return Xx, Yy

3. Elman Network Forward Pass
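
`L_model_forward` in this section delegates to `linear_activation_forward`, which is not shown. The sketch below is one possible pure-Python version, with the Elman term `U · R_prev` added when both arguments are supplied. Treating vectors as flat lists, the `matvec` and `activate` helpers, and the toy weights are assumptions for illustration only.

```python
import math

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (flat list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def activate(Z, activation):
    """Apply an element-wise activation; unknown names fall back to identity."""
    if activation == "sigmoid":
        return [1.0 / (1.0 + math.exp(-z)) for z in Z]
    if activation == "relu":
        return [max(0.0, z) for z in Z]
    return list(Z)

def linear_activation_forward(A_prev, W, b, activation, U=None, R_prev=None):
    """Compute Z = W·A_prev + b (+ U·R_prev for the recurrent layer) and A = g(Z)."""
    Z = [wz + bz for wz, bz in zip(matvec(W, A_prev), b)]
    if U is not None and R_prev is not None:
        Z = [z + r for z, r in zip(Z, matvec(U, R_prev))]
    return Z, activate(Z, activation)

W = [[1.0, -1.0], [0.5, 0.5]]
b = [0.0, 0.0]
U = [[0.0, 1.0], [1.0, 0.0]]  # feedback weights (made-up values)
R = [0.0, 0.0]                # hidden state starts at zero
for x in ([1.0, 2.0], [1.0, 2.0]):
    Z, R = linear_activation_forward(x, W, b, "relu", U, R)
    print(R)
```

The same input is fed in twice, yet the second activation differs from the first because the previous hidden state contributes through `U`. That carried-over state is the memory an Elman layer provides.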

    def L_model_forward(X, R_prev, parameters, nn_architecture):
        # RNN_layer is not a parameter here, so it must be defined at module level.
        forward_cache = {}
        A = X
        number_of_layers = len(nn_architecture)
        forward_cache['A0'] = A
        for l in range(1, number_of_layers):
            A_prev = A
            W, b = parameters['W'+str(l)], parameters['b'+str(l)]
            activation = nn_architecture[l]['activation']
            if l == RNN_layer:
                # Recurrent layer: include the previous hidden state via U.
                U = parameters['U'+str(l)]
                Z, A = linear_activation_forward(A_prev, W, b, activation, U, R_prev)
            else:
                Z, A = linear_activation_forward(A_prev, W, b, activation)
            forward_cache['Z'+str(l)] = Z
            forward_cache['A'+str(l)] = A
        # Convert the final layer's output into action probabilities.
        AL = softmax(A)
        return AL, forward_cache

4. Training the Network
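
The call in this section passes `learning_rate` and `num_iterations` to `L_layer_model`, whose body is not shown. A training loop of this kind typically repeats a gradient-descent parameter update on every iteration; the sketch below shows that update on a toy one-parameter cost. The `update_parameters` helper and the `d`-prefixed gradient keys are assumptions, not the project's code.

```python
def update_parameters(parameters, grads, learning_rate):
    """Return new parameters after one gradient-descent step.

    Assumes every parameter is a flat list of floats and that `grads`
    stores the gradient for key `k` under `'d' + k` (for example 'dW1').
    """
    updated = {}
    for key, values in parameters.items():
        gradient = grads["d" + key]
        updated[key] = [v - learning_rate * g for v, g in zip(values, gradient)]
    return updated

# Toy problem: minimise cost(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
parameters = {"W1": [0.0]}
for iteration in range(200):
    grads = {"dW1": [2.0 * (parameters["W1"][0] - 3.0)]}
    parameters = update_parameters(parameters, grads, learning_rate=0.0075)
print(parameters["W1"][0])
```

With the same learning rate (0.0075) and iteration count (200) as the call in this section, the toy parameter moves most of the way from 0 toward its optimum at 3 without fully reaching it. This shows how a small learning rate trades speed for stability.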

    trained_parameters = L_layer_model(
        Xx, Yy, nn_architecture, RNN_layer,
        learning_rate=0.0075,
        num_iterations=200,
        print_cost=True
    )

5. Testing on Maps
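
`testMapPlotValues` is not shown in this section. Conceptually, testing on a map means repeatedly asking the trained network for an action and moving the robot accordingly. The sketch below shows such a loop with a stand-in policy; `rollout`, `always_right`, the goal check, and the move table are all hypothetical and stand in for the project's own logic.

```python
# Assumed action order and grid moves as (row change, column change).
ACTIONS = ["Forward", "Backward", "Left", "Right"]
MOVES = {"Forward": (-1, 0), "Backward": (1, 0), "Left": (0, -1), "Right": (0, 1)}

def rollout(start, goal, grid, policy, max_steps=100):
    """Follow `policy` from `start` until the goal, a collision, or `max_steps`.

    `policy(position, grid)` stands in for a forward pass of the trained
    network and must return four action probabilities.
    """
    position = list(start)
    path = [tuple(position)]
    for _ in range(max_steps):
        if position == list(goal):
            break
        probs = policy(position, grid)
        action = ACTIONS[probs.index(max(probs))]  # greedy choice
        d_row, d_col = MOVES[action]
        r, c = position[0] + d_row, position[1] + d_col
        blocked = not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == 1
        if blocked:
            break  # stop the test run on a collision
        position = [r, c]
        path.append((r, c))
    return path

def always_right(position, grid):
    """Stand-in policy that always prefers 'Right'."""
    return [0.1, 0.1, 0.1, 0.7]

empty_map = [[0] * 4 for _ in range(3)]
print(rollout((1, 0), (1, 3), empty_map, always_right))
```

The returned list of visited cells is the kind of trace a plotting routine could draw on top of the map.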

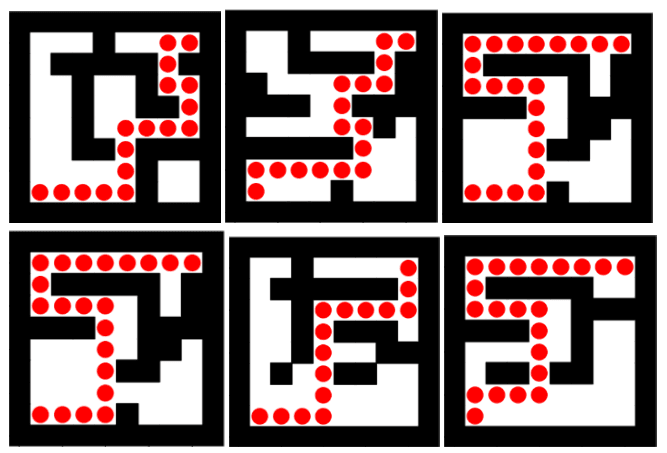

    for maps in mapArray:
        testMapPlotValues(initialPosition, trained_parameters, nn_architecture, maps)
    plot.show()

This approach shows the potential of simple RNNs for embedded AI in pathfinding problems, especially in structured indoor navigation scenarios.

Project Articles

Building a neural net that learns to navigate using lidar input and Elman-style memory.

Using synthetic lidar data to train a mobile robot for neural-based decision making.